REAL-TIME STREAMING DATA PROJECT

Context:

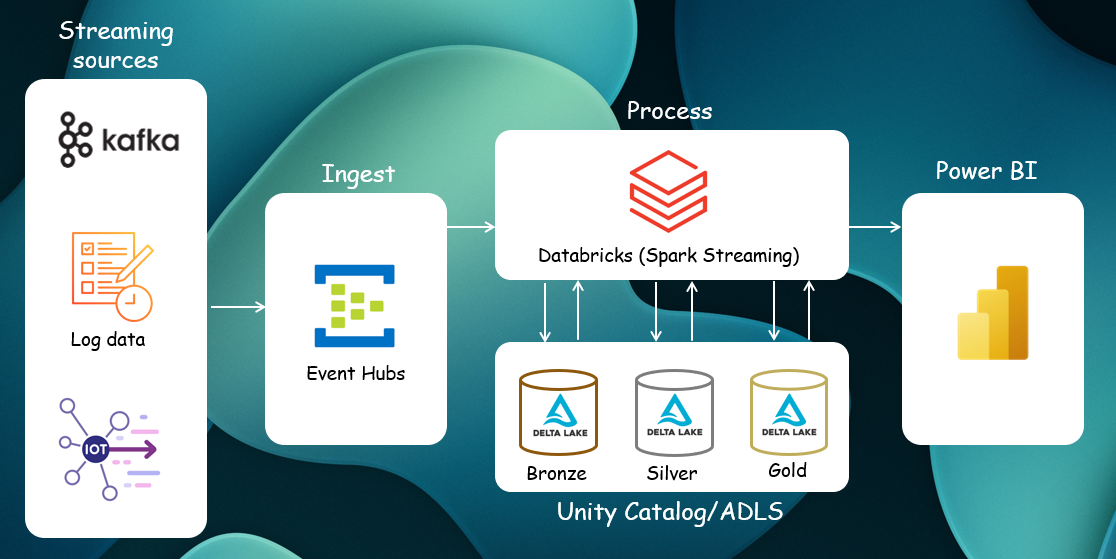

In this Real-Time Streaming Data project, I built a scalable and efficient data pipeline using Azure Event Hubs and Databricks to process streaming data in near real-time. The objective was to ingest event streams from multiple sources, including Kafka and IoT devices, into Azure Event Hubs and process them using Spark Structured Streaming in Databricks. The processed data was then stored in a Delta Lakehouse, following the Medallion Architecture (Bronze, Silver, Gold) to ensure a structured and optimized data flow.

The Bronze layer retains raw event data for traceability, the Silver layer cleans and transforms the data for structured querying, and the Gold layer aggregates and optimizes the data for advanced analytics. This structured approach ensures both flexibility and performance in handling large volumes of real-time data.

Finally, I integrated the data into Power BI using DirectQuery, enabling dynamic dashboards that refresh in near real time with a maximum latency of 20 minutes.

Solution Architecture

Step 1: Creating an Azure Event Hubs Resource

The first step in this project is to create an Azure Event Hubs resource within a resource group in Azure. Event Hubs serves as the ingestion layer, capable of handling high-throughput event streams in real time.

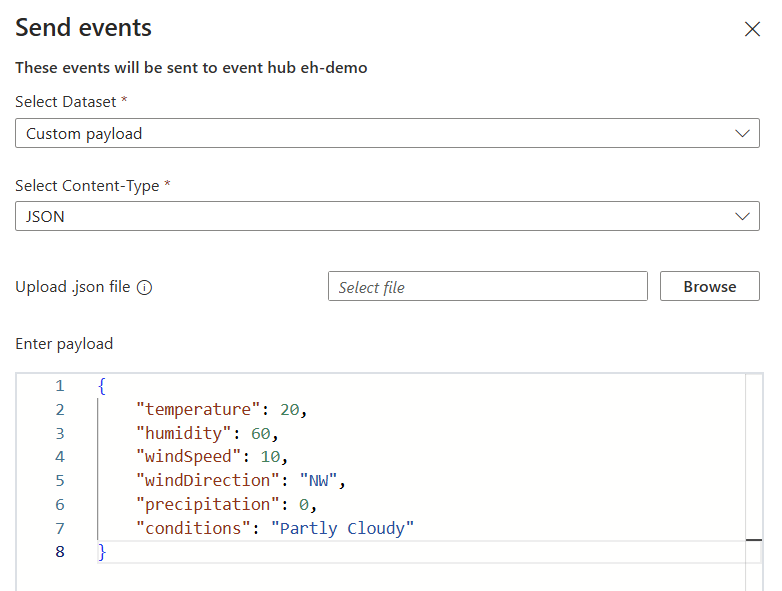

For this project, I use synthetic data generated with the Data Explorer feature in Event Hubs, which lets me simulate incoming events of different types. Specifically, I send JSON-formatted events, each representing a single record made up of key-value pairs, similar to a row in a database table.
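To make the event shape concrete, here is a minimal sketch of one synthetic weather event serialized as JSON. The field names (`timestamp`, `temperature`, `humidity`, `windSpeed`, `precipitation`, `condition`) and units are assumptions chosen to match the metrics discussed later in this write-up; the Data Explorer sample payload may use different names.

```python
import json
import random
from datetime import datetime, timezone

# Hypothetical field names and units; adjust them to match the payload Data Explorer actually sends.
CONDITIONS = ["Sunny", "Cloudy", "Partly Cloudy"]


def make_weather_event(rng: random.Random, now: datetime) -> str:
    """Build one synthetic weather event as a JSON string (one record = one event)."""
    event = {
        "timestamp": now.isoformat(),
        "temperature": round(rng.uniform(-5.0, 35.0), 1),   # degrees Celsius (assumed)
        "humidity": rng.randint(20, 100),                   # percent (assumed)
        "windSpeed": round(rng.uniform(0.0, 60.0), 1),      # km/h (assumed)
        "precipitation": round(rng.uniform(0.0, 10.0), 1),  # mm (assumed)
        "condition": rng.choice(CONDITIONS),
    }
    return json.dumps(event)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so repeated runs produce the same events
    print(make_weather_event(rng, datetime.now(timezone.utc)))
```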

Step 2: Setting Up Azure Databricks and Connecting to Event Hubs

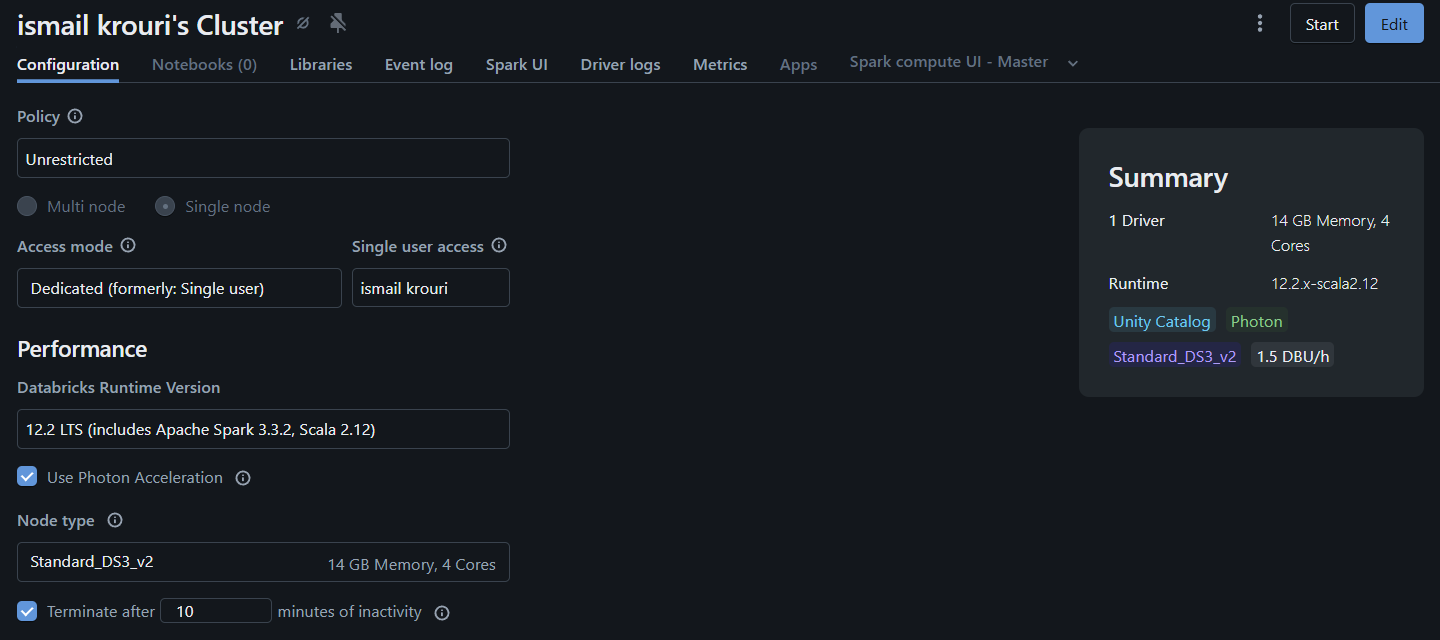

Next, I create an Azure Databricks resource in the same resource group as the previously created Event Hubs instance. Within Databricks, I set up a compute cluster to process the incoming streaming data.

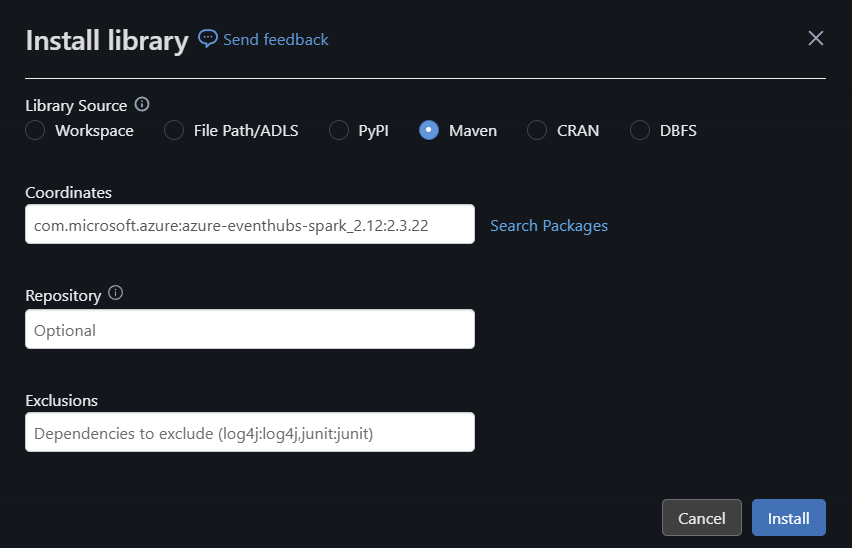

To establish a connection between Azure Databricks and Azure Event Hubs, I install the Azure Event Hubs connector library on the cluster. This library enables Spark Structured Streaming to read and process event data from Event Hubs efficiently.

Step 3: Initiating the Stream and Processing the Bronze Layer

After configuring the compute cluster, the next step is to create a Databricks notebook within my workspace. This notebook is used to execute the code required to process streaming data and store it in the Databricks Lakehouse.

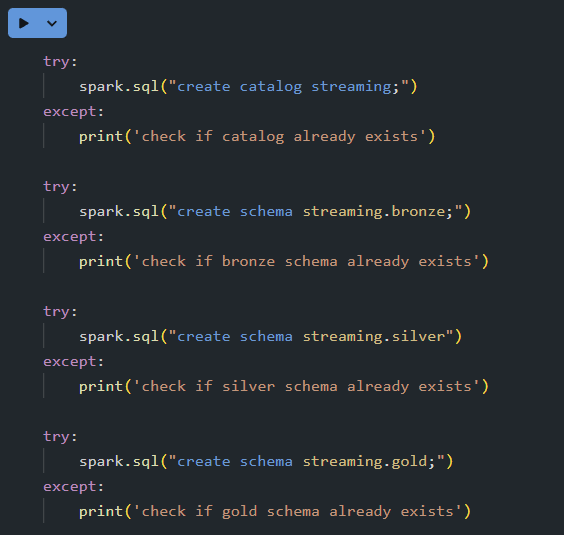

1. Implementing the Medallion Architecture in Unity Catalog

The first task is to set up the schema and catalog structure that supports the Medallion Architecture (Bronze, Silver, Gold). This ensures that data is ingested, processed, and refined in a structured way.
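A minimal sketch of that setup, assuming a catalog named `streaming` with schemas `bronze`, `silver`, and `gold` (inferred from the table names `streaming.silver.weather` and `streaming.gold.weather_summary` used later). It only builds the Unity Catalog SQL statements; in a Databricks notebook each one would be passed to `spark.sql(...)`.

```python
CATALOG = "streaming"  # assumed catalog name, inferred from the table names used later
LAYERS = ("bronze", "silver", "gold")


def medallion_ddl(catalog: str = CATALOG, layers: tuple[str, ...] = LAYERS) -> list[str]:
    """Return SQL statements that create one catalog and one schema per Medallion layer."""
    statements = [f"CREATE CATALOG IF NOT EXISTS {catalog}"]
    statements += [f"CREATE SCHEMA IF NOT EXISTS {catalog}.{layer}" for layer in layers]
    return statements


if __name__ == "__main__":
    for stmt in medallion_ddl():
        # In a Databricks notebook you would run: spark.sql(stmt)
        print(stmt)
```

Using `IF NOT EXISTS` keeps the cell idempotent, so the notebook can be rerun without failing on objects that already exist.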

Once the Medallion architecture is set up in Databricks, the next step is to configure and initialize the real-time data stream from Azure Event Hubs into Databricks.

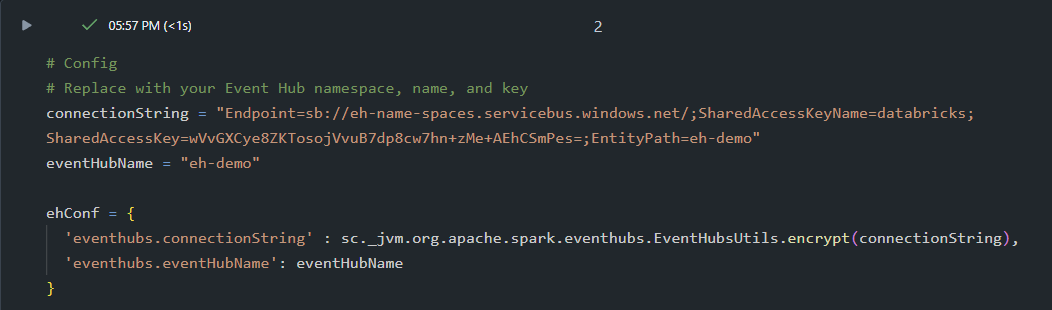

2. Configuring the Connection to Event Hubs

To establish a connection between Databricks and Azure Event Hubs, I define the necessary configuration settings: the Event Hubs namespace, the event hub name, and the shared access key.
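These settings combine into a standard Event Hubs connection string. The sketch below only assembles that string; the policy name `RootManageSharedAccessKey` and every argument value are placeholders. With the azure-eventhubs-spark connector, the string is then supplied under the `eventhubs.connectionString` option, which recent connector versions expect to be encrypted with `EventHubsUtils.encrypt` first; verify that detail against the documentation for the connector version installed on the cluster.

```python
def eventhubs_connection_string(namespace: str, event_hub: str, key_name: str, key: str) -> str:
    """Assemble an Azure Event Hubs connection string scoped to a single event hub."""
    return (
        f"Endpoint=sb://{namespace}.servicebus.windows.net/;"
        f"SharedAccessKeyName={key_name};"
        f"SharedAccessKey={key};"
        f"EntityPath={event_hub}"
    )


if __name__ == "__main__":
    # Placeholder values; in practice read the key from a Databricks secret scope, not source code.
    conn = eventhubs_connection_string(
        namespace="my-namespace",
        event_hub="weather",
        key_name="RootManageSharedAccessKey",
        key="<access-key>",
    )
    # On Databricks with the azure-eventhubs-spark connector, the conf would look roughly like:
    #   eh_conf = {"eventhubs.connectionString":
    #              sc._jvm.org.apache.spark.eventhubs.EventHubsUtils.encrypt(conn)}
    print(conn)
```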

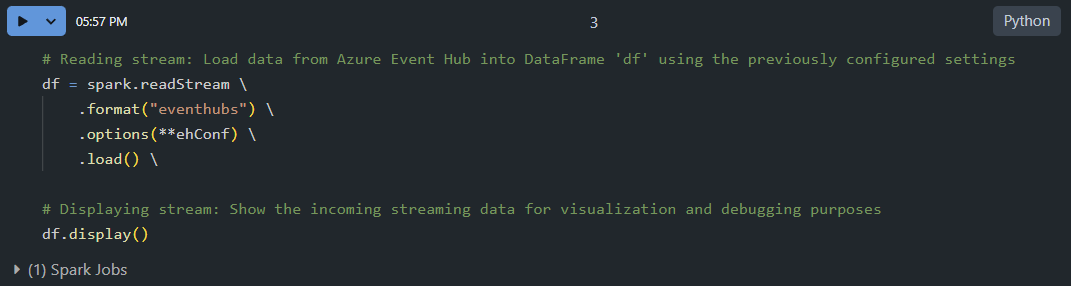

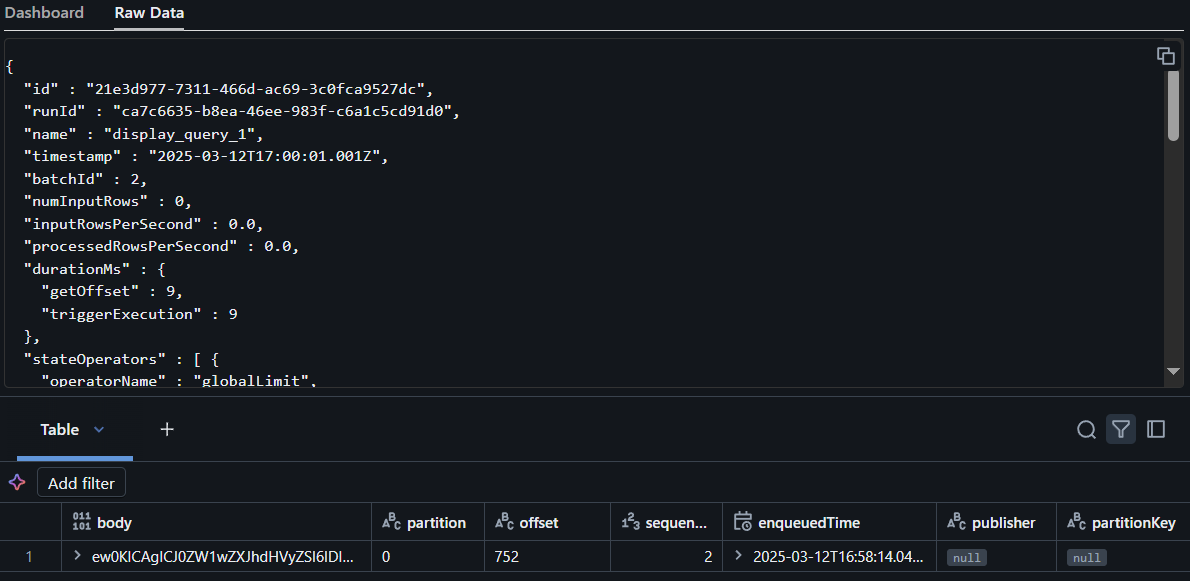

3. Initializing the Streaming Data Pipeline

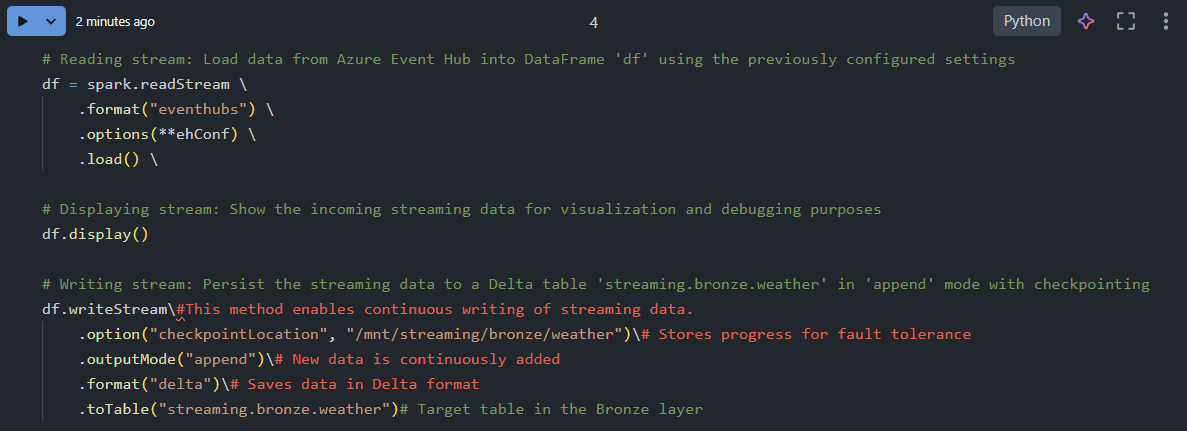

Once the connection is configured, I use Spark Structured Streaming to read and display incoming events from Event Hubs.
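For orientation, each row the connector returns carries the event payload plus Event Hubs metadata. The dataclass below models one such row in plain Python, based on the column list documented for the azure-eventhubs-spark connector (`body`, `partition`, `offset`, `sequenceNumber`, `enqueuedTime`, `publisher`, `partitionKey`, `properties`, `systemProperties`); compare it with what `df.printSchema()` shows on your cluster. The detail that matters for the later steps is that `body` arrives as raw bytes.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Optional


@dataclass
class EventHubsRow:
    """Plain-Python model of one row read from Event Hubs (column names follow the connector docs)."""
    body: bytes                 # the JSON payload, still binary-encoded
    partition: str
    offset: str
    sequenceNumber: int
    enqueuedTime: datetime
    publisher: Optional[str] = None
    partitionKey: Optional[str] = None
    properties: dict = field(default_factory=dict)
    systemProperties: dict = field(default_factory=dict)


if __name__ == "__main__":
    row = EventHubsRow(
        body=b'{"temperature": 21.5, "condition": "Sunny"}',  # made-up sample payload
        partition="0",
        offset="0",
        sequenceNumber=1,
        enqueuedTime=datetime(2024, 1, 1, 12, 0),
    )
    print(type(row.body).__name__)  # bytes: not yet readable as JSON
```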

4. Persisting Streaming Data in the Databricks Lakehouse

On its own, readStream only defines a streaming source. Displaying the stream in a notebook processes incoming events for inspection, but the results live in memory only for the lifetime of the query: once the stream stops, they are gone because nothing was written to persistent storage.

To keep the data, I write the stream to a permanent location with writeStream. In this step, I persist the incoming event data into the Bronze layer of the Lakehouse in Delta format, so it remains available for further processing and analysis.

Step 4: Processing the Silver Layer

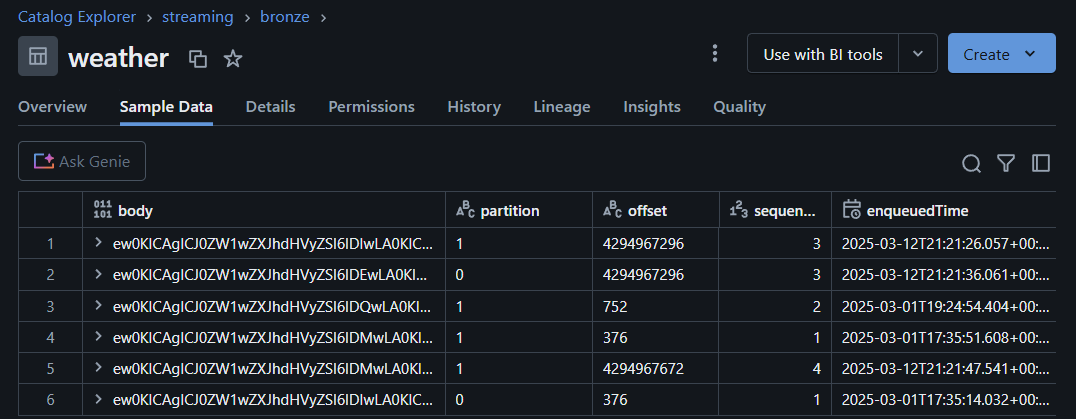

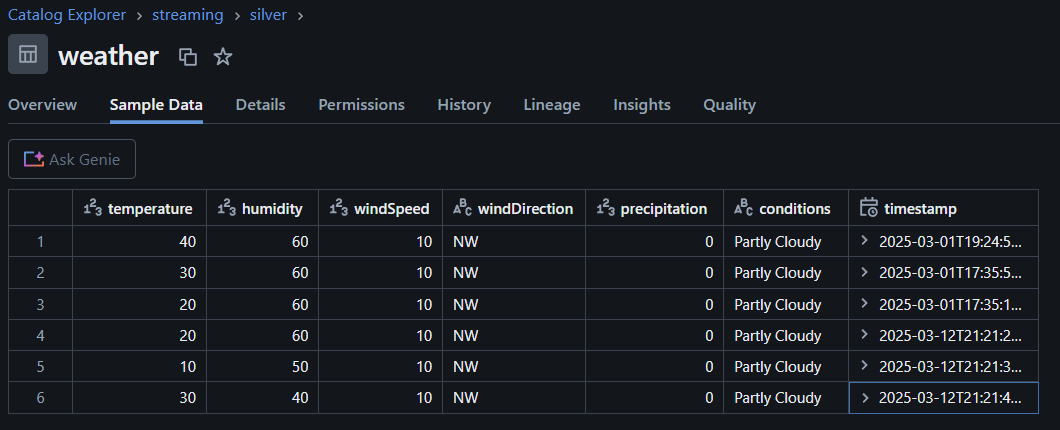

After successfully ingesting and persisting raw event data in the Bronze Layer, the next step is to process and clean the data to make it structured and usable.

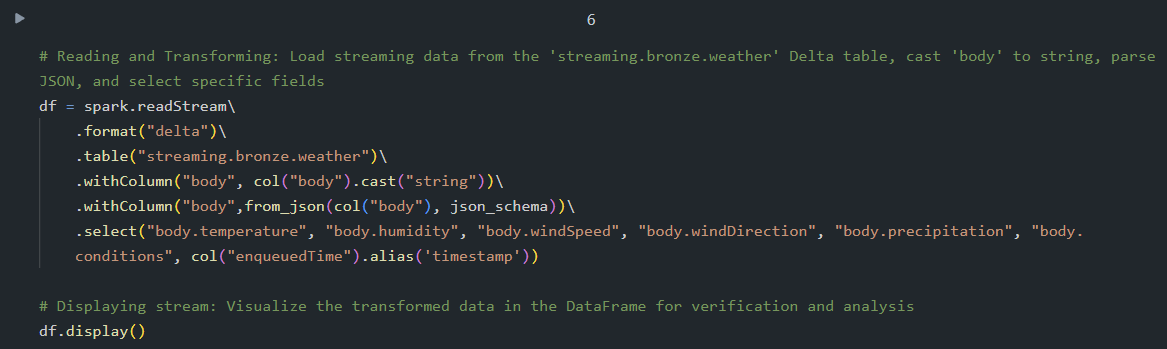

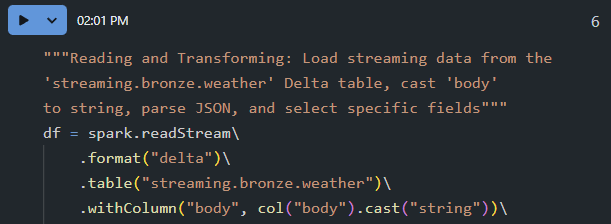

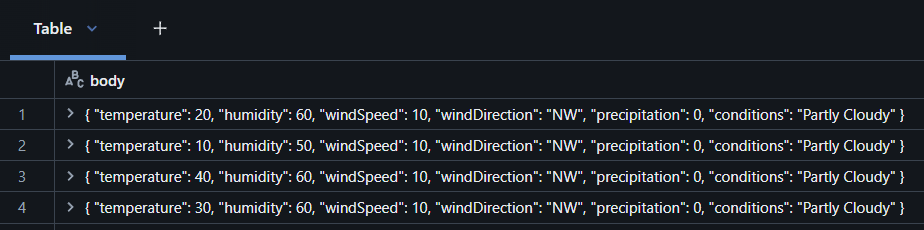

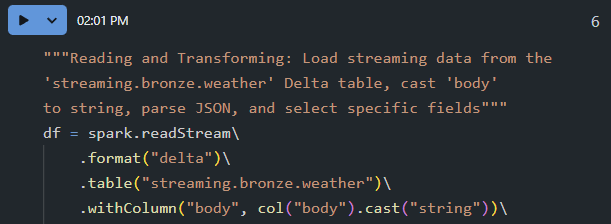

Currently, the event data sits in the body column in binary format, which makes it unreadable for analysis. To make it structured and queryable, I first convert it to a string before performing further transformations.

1. Convert Binary Data to String

The first step in processing the Silver Layer is to convert the body column from binary to string using the cast() function.
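In PySpark this is a one-line cast, `col("body").cast("string")`, which interprets the bytes as UTF-8 text. The plain-Python sketch below shows the same conversion on a single made-up payload; the `errors="replace"` policy is a choice made for this sketch, not something taken from the pipeline.

```python
# PySpark equivalent (not executed here):
#   from pyspark.sql.functions import col
#   silver_df = bronze_df.withColumn("body", col("body").cast("string"))


def decode_body(body: bytes) -> str:
    """Decode a binary Event Hubs payload into a UTF-8 string."""
    # errors="replace" swaps invalid byte sequences for U+FFFD instead of raising.
    return body.decode("utf-8", errors="replace")


if __name__ == "__main__":
    raw = b'{"temperature": 21.5, "humidity": 60, "condition": "Sunny"}'
    print(decode_body(raw))
```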

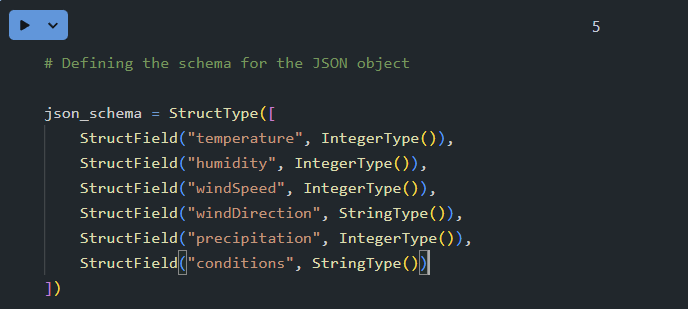

2. Parsing the JSON Payload

After converting the body column to a string, the next step is to parse that JSON string into structured columns. This organizes each event's key-value pairs into typed fields, making the data ready for further analysis and aggregation.

To extract the JSON fields reliably, I first define a schema using StructType and StructField from PySpark.

Next, I apply the predefined schema with from_json() to parse the string into a structured column, then expand its fields into individual columns.
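The schema-then-parse pattern can be sketched in plain Python: a field-to-type mapping stands in for the `StructType`, and `parse_event` applies it to one JSON string, keeping only the declared fields and setting missing, mistyped, or unparseable values to `None` instead of failing. This loosely follows the spirit of `from_json()`, which produces nulls rather than stopping the stream, but it is an illustration, not Spark's parser, and the field names are hypothetical.

```python
import json
from typing import Any, Optional

# PySpark equivalent (not executed here; field list shortened):
#   from pyspark.sql.functions import col, from_json
#   from pyspark.sql.types import StructType, StructField, StringType, DoubleType
#   schema = StructType([StructField("temperature", DoubleType()), StructField("condition", StringType())])
#   parsed = df.withColumn("data", from_json(col("body"), schema)).select("data.*")

# Hypothetical schema: field name -> accepted Python type(s).
WEATHER_SCHEMA: dict[str, tuple[type, ...]] = {
    "timestamp": (str,),
    "temperature": (int, float),
    "humidity": (int, float),
    "windSpeed": (int, float),
    "precipitation": (int, float),
    "condition": (str,),
}


def parse_event(payload: str, schema: dict[str, tuple[type, ...]] = WEATHER_SCHEMA) -> dict[str, Optional[Any]]:
    """Parse one JSON string into a flat record containing exactly the schema's fields."""
    try:
        data = json.loads(payload)
    except json.JSONDecodeError:
        data = {}
    if not isinstance(data, dict):
        data = {}  # e.g. a JSON array or number: treat as a record with no usable fields
    record: dict[str, Optional[Any]] = {}
    for name, types in schema.items():
        value = data.get(name)
        # This schema has no boolean fields, and bool is a subclass of int, so reject booleans explicitly.
        if isinstance(value, bool) or not isinstance(value, types):
            value = None
        record[name] = value
    return record


if __name__ == "__main__":
    print(parse_event('{"temperature": 21.5, "humidity": 60, "condition": "Sunny"}'))
```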

Now that the event data has been parsed into structured columns, the next step is to persist it in the Silver Layer of the Databricks Lakehouse, where it is stored reliably and ready for further analytics.

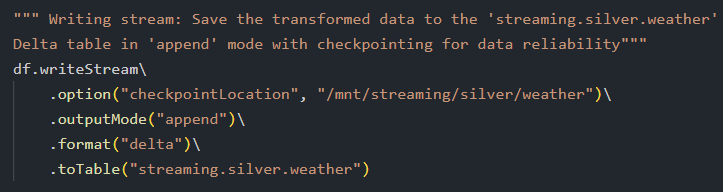

3. Writing Transformed Data to the Silver Table

I use writeStream to save the structured data into a Delta table (streaming.silver.weather). This allows for incremental data processing, ensuring that new data continuously flows into the Silver Layer without overwriting existing records.
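Conceptually, an append-mode stream with a checkpoint behaves like the toy model below: the sink remembers the last offset it committed for each partition, so a restarted query processes only newer events and never rewrites rows that are already stored. This is a simplified illustration of the idea, not how Delta Lake or Structured Streaming implement checkpoints; the `checkpointLocation` path in the comment is a placeholder.

```python
from dataclasses import dataclass, field

# Roughly corresponds to (not executed here; checkpoint path is a placeholder):
#   (silver_df.writeStream.format("delta")
#        .outputMode("append")
#        .option("checkpointLocation", "/path/to/checkpoints/silver_weather")
#        .toTable("streaming.silver.weather"))


@dataclass
class AppendOnlySink:
    """Toy append-only table plus a per-partition record of the last committed offset."""
    rows: list = field(default_factory=list)
    checkpoint: dict = field(default_factory=dict)  # partition -> last committed offset

    def write_batch(self, events: list[tuple[str, int, dict]]) -> int:
        """Append events newer than the checkpoint; return how many rows were added."""
        added = 0
        for partition, offset, record in sorted(events, key=lambda e: (e[0], e[1])):
            if offset <= self.checkpoint.get(partition, -1):
                continue  # already committed earlier: skip it rather than overwrite
            self.rows.append(record)
            self.checkpoint[partition] = offset
            added += 1
        return added


if __name__ == "__main__":
    sink = AppendOnlySink()
    first = [("0", 0, {"temperature": 20.0}), ("0", 1, {"temperature": 21.0})]
    print(sink.write_batch(first))                                       # 2 rows appended
    print(sink.write_batch(first + [("0", 2, {"temperature": 22.0})]))  # 1: only the new event
```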

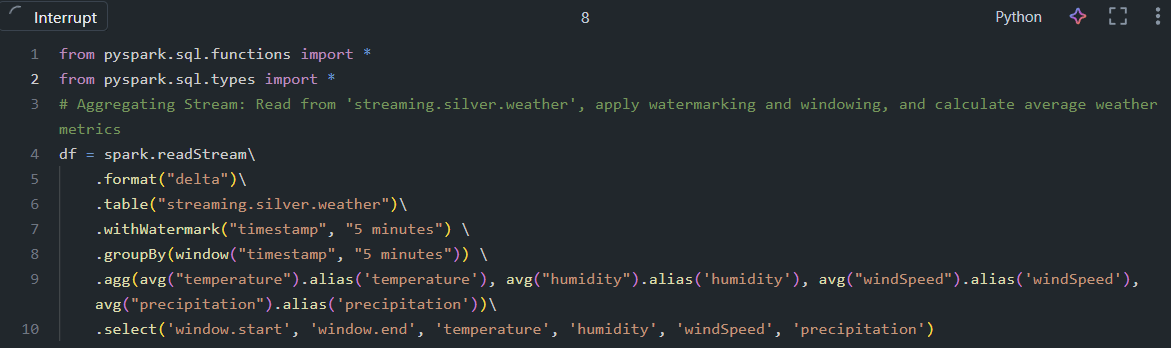

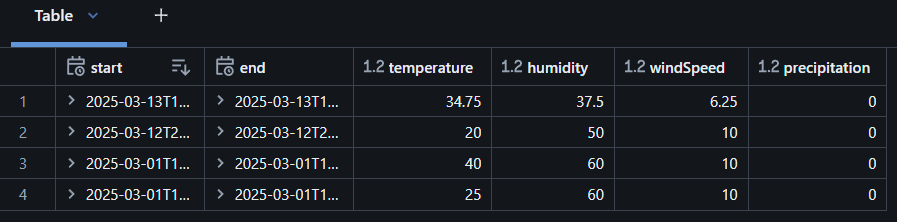

Step 5: Processing the Gold Layer

Now that the data is cleaned and structured in the Silver Layer, the next step is to aggregate it over 5-minute intervals. This transformation allows me to generate summarized insights, making the data more useful for analytics and reporting in Power BI.
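The 5-minute aggregation is a tumbling window: each event lands in exactly one non-overlapping bucket whose start is its timestamp rounded down to a multiple of five minutes, and each metric is averaged per bucket. The sketch below does this over an in-memory list, assuming `timestamp` values are already `datetime` objects and using hypothetical metric names. In PySpark the grouping key would be `window(col("timestamp"), "5 minutes")`, and a streaming version typically also declares a watermark with `withWatermark` so finished windows can be emitted in append mode; the 10-minute watermark in the comment is an arbitrary example.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import mean

# PySpark counterpart (not executed here):
#   from pyspark.sql.functions import avg, col, window
#   gold = (silver.withWatermark("timestamp", "10 minutes")
#                 .groupBy(window(col("timestamp"), "5 minutes"))
#                 .agg(avg("temperature").alias("avg_temperature")))

WINDOW = timedelta(minutes=5)
METRICS = ("temperature", "humidity", "windSpeed", "precipitation")  # hypothetical field names


def window_start(ts: datetime, size: timedelta = WINDOW) -> datetime:
    """Round a timestamp down to the start of its tumbling window (aligned to the Unix epoch)."""
    epoch = datetime(1970, 1, 1, tzinfo=ts.tzinfo)
    return ts - (ts - epoch) % size


def five_minute_averages(records: list[dict]) -> dict[datetime, dict[str, float]]:
    """Group records into 5-minute tumbling windows and average each metric per window."""
    buckets: dict[datetime, list[dict]] = defaultdict(list)
    for rec in records:
        buckets[window_start(rec["timestamp"])].append(rec)
    summary = {}
    for start in sorted(buckets):
        rows = buckets[start]
        # Skip null/missing values; drop a metric entirely if a window has no values for it.
        summary[start] = {
            m: mean(r[m] for r in rows if r.get(m) is not None)
            for m in METRICS
            if any(r.get(m) is not None for r in rows)
        }
    return summary


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, 12, 0)
    sample = [
        {"timestamp": t0 + timedelta(minutes=1), "temperature": 20.0},
        {"timestamp": t0 + timedelta(minutes=3), "temperature": 22.0},
        {"timestamp": t0 + timedelta(minutes=6), "temperature": 25.0},
    ]
    for start, averages in five_minute_averages(sample).items():
        print(start, averages)
```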

Once the data is aggregated, it needs to be persisted in the Gold Layer (streaming.gold.weather_summary) for efficient querying and reporting.

So far, I have built a real-time data streaming pipeline using Azure Event Hubs, Azure Databricks, and Delta Lake to process and analyze streaming weather data. I began by setting up Azure Event Hubs to ingest real-time JSON-formatted data and established a connection in Databricks to stream this data continuously. The raw data was first stored in the Bronze Layer, preserving its original format. Next, I cleaned and processed the data in the Silver Layer, decoding the binary event payloads and parsing the JSON into structured columns for key weather attributes such as temperature, humidity, and wind speed. Finally, in the Gold Layer, I aggregated the data into 5-minute intervals, calculating average values for each metric to make the dataset more suitable for reporting and visualization. With the data now structured and refined, it is ready to be integrated into Power BI for real-time dashboards and insights.

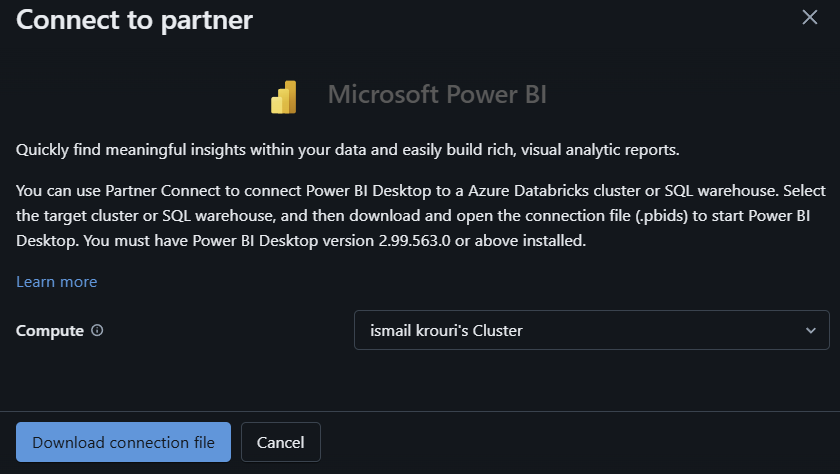

Step 6: Real-Time Power BI Report

To make the data available in Power BI, I use Databricks Partner Connect, which simplifies the integration process. Through this feature, I download a Power BI connection file linked to my Databricks cluster. Opening it in Power BI Desktop lets Power BI query the Silver and Gold Layer tables directly via DirectQuery, so the reports stay up to date with near-real-time data.

This Power BI report provides an interactive visualization of the streaming weather data processed in Azure Databricks. It draws on two layers: the Silver Layer, which presents the cleaned, record-level data, and the Gold Layer, which aggregates key weather metrics into 5-minute intervals for trend analysis. Users can filter the data by time and explore temperature variations by weather condition (Cloudy, Partly Cloudy, Sunny), making it easy to monitor weather trends as new data arrives.

Summary

This project showcases a complete real-time data processing pipeline using Azure Event Hubs, Databricks, and Power BI, following the Medallion Architecture to transform raw event data into meaningful insights. The objective was to ingest, process, and visualize real-time weather data efficiently.

- Bronze Layer: The journey begins with Azure Event Hubs, where weather data is streamed in JSON format. This raw data is stored in a Delta table without modifications, ensuring that all incoming events are retained for further processing.

- Silver Layer: The raw binary data is then transformed into a structured format by parsing JSON fields and converting them into readable columns. This step ensures that the data is cleaned and structured, making it ready for analytical queries.

- Gold Layer: The cleaned data is aggregated over 5-minute intervals to generate key insights such as average temperature, humidity, wind speed, and precipitation over time. This summarization enhances the ability to detect trends and anomalies efficiently.

Finally, the processed data is made available in Power BI using Databricks Partner Connect, enabling interactive visualizations. The Power BI report provides an intuitive dashboard with filters, time-based aggregations, and condition-based analysis, allowing users to explore weather trends dynamically.

This project demonstrates the effectiveness of Azure Databricks and Delta Lake for real-time streaming and big data processing. By leveraging cloud-based event streaming, structured transformations, and advanced visualization tools, it offers a scalable and robust solution for handling continuous data streams and extracting actionable insights.